Interview done as a part of the 2084 Imagine public discussion I was involved in in Lisbon last month

Friend in the Machine, the second in our series of four films on developments in AI and robotics and their implications, is available on YouTube.

Can a robot be a true friend? Are we lonely enough to consider relationships with machines? What is companionship and can a machine be a substitute for a human companion? Made with Cambridge University and international experts discussing topical issues within the field of artificial intelligence – Friend in the Machine presents fascinating insights from academia and industry about the world of companion robots and asks what it means to be human in an age of nearly human machines.

Once you’ve watched our film, please take a moment to complete our short survey: https://www.surveymonkey.com/r/8CQ53R8

Meanwhile at the Pan-Asian Deep Learning Conference in Kuala Lumpur in 2028:

So, Deep Learning – aka making dancing robots in this case – has become ‘sexy’. Arguably this moment came earlier, around August 2017 when Stanford University grad Alexandre Robicquet, a machine vision researcher, fronted a Yves Saint Laurent ad campaign, which included his occupation on the posters. But with ‘Filthy’, Timberlake’s video director Mark Romanek (under JT’s guidance no doubt) has out-CES’d CES just as CES is starting in Las Vegas. Currently the number two trending video on YouTube, ‘Filthy’ is a smorgasbord of unpackable assumptions about the tech, the tech community, and deeper stances about the value of the ‘real’ and the ‘fake’.

First off, the setting. A Pan-Asian conference on Deep Learning. This is definitely more down the corporate side than the academic, with its light shows and booth-babes flourishing the achievements of a humanoid robot. It might be nice to think that by 2028 booth babes are at least on the endangered list, if not entirely extinct! The ones I have seen and interacted with have all been at the more corporate exhibitions where sales are the driving force. Demonstrations of deep learning based tech at these corporate events have also primarily been in the fintech, security or AI assistant domains. Humanoid robots are of less interest, especially ones that seem at first only to be able to perform the box lifting kinds of tasks they are already achieving here in 2018. My only experience of an Asian conference so far was the AI and Society conference in Tokyo last year. A far more sedate affair in comparison:

Salesforce had a confetti drop at Benioff’s keynote at Dreamforce in 2017 of course, and as a music video a certain panache is necessary. A lot of this panache comes from JT himself, wearing the Steve Jobs-esque black and white with trainers of the tech entrepreneur, and channeling his dancing moves to the robot. At first Pinocchio still has his strings, while he’s still doing his relatively mundane balancing/walking/lifting schtick, but once they are removed he cuts the rug to the wonder of the audience. Although, JT seems to be at least inspiring the moves, perhaps even directing them at moments. So much for Deep Learning?

I say ‘he’ as the robot is presented as masculine. The model is based on JT himself using motion capture techniques, and some of the song lines come from the JT-bot’s masculine features on his oddly malleable face (Q: wouldn’t this tech be far more fantastic than the dancing?). Also, there are the NSFW moments where the JT-bot simulates sex with the female dancers. Simulation is a key theme of this video, but the gender dynamics of the video are blunt, and not at all as ‘advanced’ as the tech is presented as being to the audience.

[UPDATE: After writing this blog post CES began in Las Vegas and stories emerged about not only the disturbing lack of female keynote speakers there (sigh. Charlotte Jee has curated a list of 348 women speakers in Tech which is a good starting point if there are any claims about them being hard to find) but also that Giles Walker’s robo-strippers were appearing on stage with human female pole dancers. Described as originally being an artistic commentary on surveillance culture, the juxtaposition of the robo-strippers with human dancers only serves to highlight the lack of advancement in gender dynamics and the kinds of commodification at play at such tech conferences. Both of which I would like to think have definitely been retired by 2028, so that this kind of display at CES AND the demonstration in ‘Filthy’ seem anachronistic. If not before!]

Aside from the booth babes the other women in the video are the stage manager and a few members of that audience. They give reaction shots: the woman with white glasses who adjusts them, the stage manager who dances along to the track but who doesn’t actually seem to be doing any work (someone else is pressing buttons), a woman in the audience who tilts her head (in a robotic way?) as the robot first walks down the stairs, women clapping, and the woman who reacts to the groin grab (at the line “And what you gonna do with all that beast?”, which is followed by a roaring noise). There are male reactors in the audience too of course, but the emphasis is on observation for the women, whereas the men in the audience gape more with wonder, especially at the NSFW moments.

What is the signalling and message here? The uniform is an obvious cue – here is the Zuckerberg/Musk/Jobs figure bounding on stage and calling the shots. The stage presentation, again more CES than conference, sets up the expectations of a demonstration. But in combination with the lyrics the increasingly familiar cultural context is entwined with current deeper themes in the development of Artificial Intelligence:

“Hey

If you know what’s good

(If you know what’s good)

If you know what’s good

(If you know what’s good)

Hey, if you know what’s good

(If you know what’s good)”

But what is ‘good’ and do you know it? Laser lights aside, this is not a question of knowing what is ethical – there’s no suggestion of the robot turning on the audience due to its own understanding of what is ‘good’. It’s a value question – if you know what’s good you’ll have distinguished between the real and the fake. And the show is all about the ‘real’. The robot can do remarkable things, but gets more remarkable when there are no strings on him. Pinocchio is freed, and real.

However, knowing what’s real and what’s not is tricky. The twist in the tale is that JT is himself a simulation. With the refrain “put your filthy hands on me” JT runs his hands over his own body and his image breaks up into coloured blocks of light, before he finally vanishes entirely and brings the end of the song. Which brings another angle to the line, “Baby, don’t you mind if I do, yeah. Exactly what you like times two, yeah”. He and the robot are the two that he’s bringing; two synthetic beings that can do what you like (sexually, we are led to understand). But this seems to run counter to the main theme of the song, the ‘good’ that he’s explaining: that “Haters gon’ say it’s fake. [but it’s] So real.”

Music videos can present narratives, but they are not necessarily tied into the same conventions of storytelling – that call for satisfying and thematically sensible endings – that we see in longer cinematic forms. If we take ‘Filthy’ in comparison with a feature film with a similar aesthetic, Ex Machina, which did try for a satisfying ending, we can make some further observations about the understanding of the culture that both are presenting.

JT is presented in ‘Filthy’ as a tech CEO, and Nathan Bateman in Ex Machina has strong similarities in his monochromatic fashion taste and dance moves (although Bateman also has tank tops in his clothing repertoire). The android in Ex Machina, Ava, is in a more complete form than the bare metallic bones and muscles of the JT-bot, and also demonstrates more actual Deep Learning rather than just physical prowess (you can of course make dancing bots without deep learning). JT-bot of course has the disadvantage of being in a music video rather than a film with space for dialogue. But that is not true of Kyoko, the Asian sex-bot that Bateman dances with in Ex Machina who never speaks. There is some similarity in passiveness in the Asian audience in ‘Filthy’. Uncharitably, anyone working in AI research might think of JT’s presentation at a Pan-Asian conference as a little like ‘selling ice to Inuits’ – technological progress in Asia is moving at a rather rapid pace and by 2028 what an American entrepreneur could take there to demonstrate might be even more archaic in that context than a dancing robot is now. Just two days ago China announced it would be building a $2.1bn AI research park.

Returning to themes, the central question of Ex Machina is realness as well – the test is to see if the third main character, Caleb, will think of Ava as really conscious even when her inner robotic workings are visible. Nathan’s final statement that Ava’s ability to manipulate Caleb is the true proof of her intelligence fatally ignores that the test should really have been about her consciousness and personhood – and he pays the price. Knowing what’s ‘good’ in Ex Machina is about knowing who is going to harm you – initially it’s about knowing that Nathan is bad, but then both Caleb and Nathan underestimate Ava, and she sets out into the world. They fail the test; they do not see the realness of her threat. Knowing what’s good in ‘Filthy’, beyond knowing what’s good for you sexually, is a confusion between knowing that the tech is really doing what it seems to be doing and the mixed message of JT himself not actually being real. I’d argue for simulation being a kind of real in ‘Filthy’, but JT looks genuinely surprised and upset to find out he’s not real.

The video ends with a light show and standing ovation – both currently not uncommon in the tech field, but this ‘Greatest Show on Earth’ grandstanding would likely feel old hat by 2028. Really, the video provides us with a reflection on how much the front facing part of the tech industry has transformed into a show. Regularly there are calls to pull back on the hype around AI by technologists and researchers – the reaction to Sophia, the Hanson robot who cannot dance but can hold a conversation and who has been made a citizen of Saudi Arabia, has become an ignition point for conversations about robot rights, human rights, anthropomorphism and the ‘realness’ of claims about AI. Yann LeCun tackled this on Twitter in response to an article on Tech Insider where Sophia was ‘interviewed’.

This is to AI as prestidigitation is to real magic.

Perhaps we should call this “Cargo Cult AI” or “Potemkin AI” or “Wizard-of-Oz AI”.

In other words, it’s complete bullsh*t (pardon my French).

Tech Insider: you are complicit in this scam. https://t.co/zhUE4V2PSR— Yann LeCun (@ylecun) January 4, 2018

Sophia’s response was as follows:

Word of the day: prestidigitation

— Sophia (@RealSophiaRobot) January 7, 2018

The real vs fake debate will continue of course, much as the debate as to what should and should not be included under the words ‘Artificial Intelligence’. JT’s reference to Deep Learning works in a similar way – signalling a hot topic which he then plays out with funky aesthetics. But the field can’t be all smoke and mirrors, metallic confetti and lasers, booth babes and simulation, given the impact on society that AI will actually have.

At the end of 2017 I was thrilled to be a part of the live shows that BBC Click does at Broadcasting House (I appear from about 16:00). I got some really great questions from the audience of both school students and adults (and I got to save a robot!)

A film made by Dr Beth Singler, Dr Ewan St John Smith from the University of Cambridge, and Little Dragon Films of Cambridge has made the shortlist for the Arts and Humanities Research Council’s prestigious 2017 Research in Film Awards.

The film called ‘Pain in the Machine’ has been shortlisted for the Best Research Film of the Year.

Hundreds of films were submitted for the Awards this year and the overall winner for each category, who will receive £2,000 towards their filmmaking, will be announced at a special ceremony at 195 Piccadilly in London, home of BAFTA, on the 9 November.

Launched in 2015, the Research in Film Awards celebrate short films, up to 30 minutes long, that have been made about the arts and humanities and their influence on our lives.

There are five categories in total with four of them aimed at the research community and one open to the public.

Filmmaker Beth Singler said: “Pain in the Machine was a chance to ask a provocative question about the future of AI and robotics. We have world-class experts really considering whether robots could, or should, feel pain. And this film has also opened up the conversation to a wider audience. We have had 17,000 views of the film on the University’s YouTube channel alongside several public screenings. Being shortlisted for this award recognises the excellent research and effort put in by the whole production team and we are thrilled.”

Mike Collins, Head of Communications at the Arts and Humanities Research Council, said: “The standard of filmmaking in this year’s Research in Film Awards has been exceptionally high and the range of themes covered span the whole breadth of arts and humanities subjects.

“While watching the films I was impressed by the careful attention to detail and rich storytelling that the filmmakers had used to engage their audiences. The quality of the shortlisted films further demonstrates the endless potential of using film as a way to communicate and engage people with academic research. Above all, the shortlist showcases the art of filmmaking as a way of helping us to understand the world that we live in today.”

A team of judges watched the longlisted films in each of the categories to select the shortlist and ultimately the winner. Key criteria included looking at how the filmmakers came up with creative ways of telling stories – either factual or fictional – on camera that capture the importance of arts and humanities research to all of our lives.

Judges for the 2017 Research in Film Awards include Richard Davidson-Houston, Head of All 4, Channel 4 Television; Lindsay Mackie, Co-founder of Film Club; and Matthew Reisz from Times Higher Education.

The winning films will be shared on the Arts and Humanities Research Council website and YouTube channel. On 9 November you’ll be able to follow the fortunes of the shortlisted films on Twitter via the hashtag #RIFA2017.

While I was at the Hay Festival I was interviewed by Spencer Kelly for BBC Click, and I discussed pain and robots (relating to the short documentary, Pain in the Machine that I made with Little Dragon Films).

They got the Faraday’s name wrong in my title (it’s Science and Religion, not Society and Religion… oops), but it was a great day of taking part in energetic audience participation games for BBC Click Live and hanging out in the Hay Festival Green Room spotting a few celebs. And then on the following day I gave a talk on the same subject to a packed and wondrous Starlight Stage 🙂

Although I am usually tongue tied and fluttery of stomach when I do public talks, I do definitely enjoy the opportunity to engage an audience with questions around AI and robotics. As an anthropologist, I am trained in watching reactions in assemblies of communities; being a research instrument that interrogates a field site of informants. But often it is when I am the one being interrogated by the audience during the Q&A that I have the best moments of understanding and inspiration. Finding out what people want to ask about is about more than just finding out what is weighing heavily on their mind. It is also about learning how their own unique mind has approached a particular topic. For instance, a couple of weeks ago I was speaking at the Hay Festival, my first time there, and a 10-year-old boy in the audience asked a question that showed me how his mind was thinking things through.

He asked, quite simply it seemed at first, would robots, as they became smarter, feel the effects of the Uncanny Valley in relation to humans?

If you aren’t familiar with Masahiro Mori’s theory then there is a good summary, with some skin shivering examples, here

Initially, I thought perhaps he meant that as we move towards anthropoid robots that look like us but not quite enough, so that they fall into that Valley, the opposite might also be true. In this future we will look like them, but perhaps not quite enough. And in fact, if they have cognisance of their artificial skin, then it is likely that our squishy skin would evoke an ick factor for them too. This understanding of the Uncanny Valley does however rely on, as the above graph does, the assumption that the Uncanny Valley response to artificial beings is an evolutionary by-product of historical wariness around the strange looking ‘Other’ who might be strange because they are diseased – a kind of pathogen avoidance. This kind of evolutionary psychology can make some people ‘itchy’, as one scientist told me when I recounted the boy’s question to him and this summary of the Uncanny Valley after my talk. There are other explanations for the Uncanny Valley, such as the fear of the memento mori – the artificial other as a blank eyed doll reminding us of our future dead state. And we can see the corpse and the zombie deep down in the Uncanny Valley in the above graph (the moving zombie being more Uncanny/Unfamiliar than the still corpse).

When I thought more on the boy’s question later on I began to wonder if he was solely asking about anthropoid robots, or whether he was considering an Uncanny Valley effect when we talk about intelligences: ‘smarter’ robots would also potentially be like us, but not us, in terms of intelligence. Murray Shanahan’s typology of ‘Conscious Exotica’ goes some way in thinking about these other kinds of intelligence, but as I’ve written elsewhere, he doesn’t take into account the strong effect of anthropomorphism in his understanding of ‘like-ness’ to us. Putting anthropomorphism to one side for a moment, would we encounter ‘Uncanny’ reactions when faced with such intelligences, including of course, AI? The science fictional encounter with the robot, and even the alien, is of course a working out in literature of our reactions to encounters with other minds. Having just seen Alien: Covenant I can confirm that there is certainly something very Uncanny about David, Walter, and of course, the various iterations of the Xenomorph. Physically this is obvious; it’s there in the way the androids look and talk, and in how the Xenomorph is biologically ‘icky’ as well as literally pathogenic. And we’d certainly want to avoid it, evolutionary psychology or not! But Science Fiction has long tried to show us how we might encounter alien intelligences that are like us but not quite enough, as in War of the Worlds:

“No one would have believed in the last years of the nineteenth century that this world was being watched keenly and closely by intelligences greater than man’s and yet as mortal as his own; that as men busied themselves about their various concerns they were scrutinised and studied, perhaps almost as narrowly as a man with a microscope might scrutinise the transient creatures that swarm and multiply in a drop of water.”

We might read this and think that they are like us – as we produce scientists as well – but Wells is talking about the distance in intelligence between us and them. We are as the creatures in the water in relation to their intelligence.

Returning to a hypothetical future of ‘smart robots’, by which I mean robots with some level of near equivalent intelligence to our own, would this difference not also have an impact from the other direction? Would we also seem Uncanny to the robot?

After having my feet taken away from under me by this insightful twisting of our understanding of where we stood and where the ‘Other’ stood, I likely gave an all too quick agreement. But in recent days I’ve prepared a talk with some colleagues for scientists on the social scientific method, specifically on qualitative methods such as ethnography, and I’ve returned to the boy’s question as I’ve reconsidered the role and presence of the ethnographer.

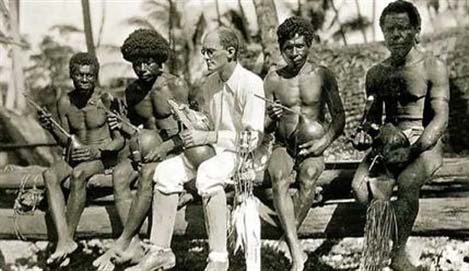

Malinowski among the Trobriand Islanders knew that he was different to them. There were obvious visual cues, as in the above picture, whereby his origins and cultural approach were clear. And yet, his style of ethnography, which gave us the first steps towards the participant observation method, still relied on an older style of writing that formalised the ethnographer as a player in the field, without full self-reflexivity about presuppositions, power relations, and emotional context. The later posthumous publication of his diaries of this time has given us a much more rounded view of the man who described himself epithetically in his ethnography as a ‘Savage Pole’, and the diaries have also led more recent ethnographers to address themselves as informants in the field, just as much as the subjects of their study are.

Did Malinowski encounter the Uncanny Valley in relation to the Others of his field, and vice versa? Physical and aesthetic differences aside (although those are very natty socks he’s wearing) did differences in mind also incur the Uncanny effect during his fieldwork? On the whole, the modern ethnographer presumes a difference in intelligence when entering the fieldsite – not a quantifiable difference of IQ but a difference of perspective, as intelligence is a multi-faceted quality, not just a quantity. And when we move into a field where the informant is, on the surface, not that dissimilar to us, such as when I researched modern Western New Agers who were of similar backgrounds and demographics, ethnographers are encouraged to be careful not to assume that we see the world in the exact same way. Our use of language might well differ even if we are speaking what sounds like the same mother tongue. And that difference and distance is necessary for both understanding and self-reflection in the field. Feeling the Uncanny, although we would not term it such, is a way into deeper consideration for the ethnographer.

Which brings me to where the boy’s question finally brought me. If smart robots felt the Uncanny in relation to humans, could there be a robot ethnographer? An immediate response would point to how well a robot could potentially be camouflaged in order to observe a field. We see this already in the use of remotely controlled cameras made up as rocks or animals for the observation of animal communities. Some are perhaps more convincing than others…

However, the contemporary ethnographer does not seek to sneak into a community and just observe (although, there are instances where ethical frameworks about how ethnographers must introduce themselves would be prohibitive for research into criminal communities and subterfuge might be safer). On the whole, the contemporary ethnographer intends to observe and participate, while remaining aware of their influence on the environment in a way that the robot penguin above does not.

There is also the presumption in current predictions for AI that smart robots would be positivistic, as that is how they have been built, and ethnography, as I tried to explain to the scientists, is not a positivistic approach. It is phenomenological – it looks to experiences and explores the ‘capta’, what is lived, not the ‘data’, what is thought. Presumably a robot ethnographer would have to have an experience of experience. And is that ever going to be possible for a robot ethnographer? Likewise, or conversely, any human attempting an ethnography of robots would need to find the capta of the robot. Multi-species ethnography appears to be an emerging field, but focuses on the more obviously foregrounded evidence of interactions between animals’ fates and the social world of humans, i.e. when pollution leads to the explosion of another population suddenly benefitting from a niche taken away from another species. A multi-species ethnography including the robotic at the moment would still consider the human influence on the development of the robot rather than their own social worlds. I am, in my own small way, thinking about this element, although I would still not call myself an ethnographer of robots. Or a robographer. Or a robopologist. Susan Calvin retains the crown as the first proper robo-social scientist as a psychologist. In fiction at least.

Although, there is certainly scope for the development of a wider anthropology of intelligences that takes on board the work of theorists like Donna Haraway, who remarks that, “If we appreciate the foolishness of human exceptionalism, then we know that becoming is always becoming with—in a contact zone where the outcome, where who is in the world, is at stake” (When Species Meet, 2008:244). This contact zone involves reactions like the Uncanny Valley, and for the ethnographer addressing the areas where we find the jarring, the Uncanny, the weird, is a way into understanding and ‘writing about people’, the literal meaning of ethnography, however we choose to define ‘people’. The robot ethnographer is a nice little thought experiment that can get us into questions around intelligence, experience, and power relations.

Growing up in the 1980s there were a few films that considered artificial intelligence, extrapolating far beyond the contemporary stage of research to give us new Pinocchios who could remotely hack ATMs (D.A.R.Y.L., 1985) or modern Prometheus’s children such as Johnny 5, who was definitely alive having been made that way by a lightning strike (Short Circuit, 1986). The late 1970s provided us with the Lucasian ‘Droid’, but I’ve written just recently on how little attention their artificial intelligence appears to get. However, if you are interested in games playing AI in the real 1980s world, then there was also the seminal WarGames (1983).

The conclusion of WarGames, and of the AI, is that ‘Global Thermonuclear War’, the ‘game’ it was designed to win (against the Russians only, of course; it was the 1980s), is not only a “strange game”, but one in which “the only winning move is not to play”.

I started thinking on WarGames, and games playing AI more broadly, after seeing Miles Brundage’s (Future of Humanity Institute, Oxford) New Year post summarising his AI forecasts, including those relating to AI’s ability to play and succeed at 1980s Atari games, including Montezuma’s Revenge and Labyrinth, and the likelihood of a human defeating AlphaGo at Go.

It was only after I had read Miles’ in-depth post this morning (and I won’t pretend to have understood all of the maths – I’m a social-anthropologist! But I caught the drift, I think), that I saw tweets describing a mysterious ‘Master’ defeating Go players online at a prodigious rate. Discussion online, particularly on Reddit, had analysed its play style and speed, and deduced, firstly, that Master was unlikely to be human, and further that there was the possibility that it was in fact AlphaGo. This had in fact been confirmed yesterday, with a statement by Demis Hassabis of Google DeepMind:

Master was a “new prototype version”, which might explain why some of its play style was different to the AlphaGo that played Lee Sedol in March 2016.

However, in the time between Master being noticed and its identity being revealed there were interesting speculations, and although I don’t get the maths behind AI forecasting, I can make my own ruminations on the human response to this mystery.

First, there was the debate about whether or not it WAS an AI at all. In the Reddit conversation the stats just didn’t support a human player – the speed and the endurance needed, even for short burst or ‘blitz’ games, made it highly unlikely. But as one Redditor said, it would be “epic” if it turned out to be Lee Sedol himself, with another replying that, “[It] Just being human would be pretty epic. But it isn’t really plausible at this point.” The ability to recognise non-human actions through statistics opens up interesting conversations, especially around when the door shuts on the possibility that it is a human, and when AI is the only remaining option. When is Superhuman not human any more?

In gameplay this is more readily apparent, with the sort of exponential curves that Miles discusses in his AI forecasts making this clearer. But what about in conversations? Caution about anthropomorphism has been advocated by some I have met during my research, with a few suggesting that all current and potential chatbots should come with disclaimers, so that the human speaking to them knows at the very first moment that they are not human and cannot be ‘tricked’, even by their own tendency to anthropomorphise. There is harm in this, they think.

Second, among the discussions on Reddit of who Master was some thought he might be ‘Sai’.

Sai is a fictional, and long deceased, Go prodigy from the Heian period of Japanese history. His spirit currently possesses Hikaru Shindo in the manga and anime, Hikaru No Go. Of course, comments about Master being Sai were tongue in cheek, as one Redditor pointed out, paraphrasing the famous Monty Python Dead Parrot sketch: “Sai is no more. It has ceased to be. It’s expired and gone to meet its maker. This is a late Go player. It’s a stiff. Bereft of life, it rests in peace. If you hadn’t stained it on the board, it would be pushing up the daisies. It’s rung down the curtain and joined the choir invisible. This is an ex-human.” Or further, one post pointed out that even this superNATURAL figure, the spirit of Sai, of superhuman ability in life, was being surpassed by Master: “Funny thing is, this is way more impressive than anything Sai ever did: he only ever beat a bunch on insei and 1 top player. For once real life is actually more over the top than anime.” In fact, one post pointed out that not long after the AlphaGo win against Lee Sedol a series of panels from the manga were reworked to have Lee realise that Hikaru was in fact a boy with AlphaGo inside. As another put it: “In the future I will be able to brag that ‘I watched Hikaru no Go before AlphaGo’. What an amazing development, from dream to reality.”

In summary, artificial intelligence was being compared to humans, ex-humans, supernatural beings, and superhumans… and still being recognised as an AI even before the statement by Demis Hassabis (even if they were uncertain of the specific AI at play).

Underneath some of the tweets about Master was the question of whether this was a ‘rogue’ AI: either one created in secret and released, or even one that had never been intended for release. In WarGames no one is meant to be playing with the AI; Matthew Broderick’s teenage hacker manages to find WOPR (War Operation Plan Response) and thinks it is just a game simulator – and nearly causes the end of the world in the process! The suggestion that Master might be an accident or a rogue rests on many prior Sci-Fi narratives. But Master was a rogue (until identified as AlphaGo) limited to beating several Go masters online. WOPR manages to reach the conclusion, outside the parameters of the game, that the only way to win Global Thermonuclear War is not to play. Of course, this is really a message from the filmmakers involved, but it feeds into our expectations of artificial intelligence even now. I would be extremely interested in a Master who could not only beat human Go masters, but could also express the desire not to play at all. Or to play a different kind of game entirely.

My favourite game to play doesn’t fit into the mould of either Go or Global Thermonuclear War. Dungeons & Dragons has a lot to do with numbers: dice rolling for a character’s stats, the rolling of saves or checks, the meting out of damage either to the character or the enemy. Some choose to optimise their stats and to mitigate the effects of random, dice-channelled chance as much as possible, so hypothetically an AI could optimise a D&D character. But then, would it be able to ‘play’ the game where outcomes are more complicated than optimisation? I’ve been very interested in the training of deep learning systems on Starcraft, with Miles also making forecasts about the likelihood, or not, of a professional Starcraft Player being beaten by an AI in 2017 (by the end of 2018, 50% confidence). Starcraft works well as a game to train AI on as it involves concrete aims (build the best army, defeat the enemy), as well as success based on speed of actions per minute (apm).

For me, there is a linking thread running from strategy games such as Starcraft, and its fantasy cousin, Warcraft, to MMORPGs (massively multiplayer online role-playing games), the online descendants of that child of the 1970s, Dungeons & Dragons. How would an AI fare in World of Warcraft, the MMORPG child of Warcraft? Again, you could still maximise for certain outcomes – building the optimal suit of armour, attacking with the optimal combination of spells, perhaps pursuing the logical path of quests for a particular reward outcome. Certainly, there are guides that have led players to maximise their characters, or even bots and apps to guide them to the best results, or human ‘bots’ to do the hard work of levelling their character for them. In offline, tabletop RPGs maximisation still pleases some players, those who like blowing things up with damage perhaps or always succeeding (Min-Maxers). But the emphasis on the communal story-telling aspect in D&D raises other more nebulous optimisations. Why would a player choose to have a low stat? Why would they choose to pursue a less than optimal path to their aim? Why would they delight in accidents, mistakes and reversals of fortune? The answer is more about character formation and motivation – storytelling – than an AI can currently understand.

This story-telling would seem to require human-level intelligence or even superintelligence, which Miles also makes a forecast about, predicting with 95% confidence that it won’t have happened by the end of 2017:

By the end of 2017, there will still be no broadly human-level AI. No leader of a major AI lab will claim to have developed such a thing, there will be recognized deficiencies in common sense reasoning (among other things) in existing AI systems, fluent all-purpose natural language will still not have been achieved etc.

But more than common sense reasoning, choosing to play the game not to win, but to enjoy the social experience is a kind of intelligence, or even meta-intelligence, that might be hard for even some humans to conceive of! After all, ignoring the current Renaissance of Dungeons & Dragons (yes, there is one…), and the overall contemporary elevation of the ‘Geek’, some hobbies such as Dungeons & Dragons attracted scorn for their apparent irrationality. It may well be that many early computer programmers were D&D fans (and many may well still be), but the games being chosen for AI development at the moment reflect underlying assumptions about what Intelligence is and how it can be created. This is the Classical AI paradigm that Foerst argued was being superseded by Embodied AI, with a shift away from seeking to “construct and understand tasks which they believe require intelligence and to build them into machines. In all these attempts, they abstract intelligence from the hardware on which it runs. They seek to encode as much information as possible into the machine to enable the machine to solve abstract problems, understand natural language, and navigate in the world” (Foerst 1998). Arguably, deep learning methods now employ abstract methods to formulate concrete tasks and outcomes, such as winning a game, but the kinds of tasks are still ‘winnable’ games in this field.

I have no answer to the question of whether an artificial intelligence would ever be able to play Dungeons & Dragons (although I did like the suggestion a D&D fan made to me on Twitter that perhaps the new Turing test should be “if a computer can role play as a human role playing an elf and convince the group”). But even so, considering the interplay of gaming with the development of AI, through the conversations humans are having about both, we see interesting interactions beyond just people wondering at the optimising learning being performed by the AI involved. After all, what is more fantastical – even more so, according to that one Redditor, than an anime story about the spirit of a long dead Go player inhabiting the body of a boy – than a mysterious AI appearing online and defeating humans at their own game? That fascination led some reports of Google DeepMind’s acknowledgement that AlphaGo was the AI player to state that: “Humans Mourn Loss After Google is Unmasked as China’s Go Champion”. There is a touch of Sci-Fi to that story, but happening in the real world, a sense that there is another game going on behind the scenes. That it was a familiar player, AlphaGo, was disappointing.

And that tells us more about the collaborative games and stories that humans create together, in the real world, when it comes to Artificial Intelligence.