Although I am usually tongue-tied and fluttery of stomach when I give public talks, I definitely enjoy the opportunity to engage an audience with questions around AI and robotics. As an anthropologist, I am trained in watching the reactions of communities in assembly, and in being a research instrument that interrogates a field site of informants. But often it is when I am the one being interrogated by the audience during the Q&A that I have my best moments of understanding and inspiration. Finding out what people want to ask about is about more than just finding out what is weighing heavily on their minds. It is also about learning how their own unique mind has approached a particular topic. For instance, a couple of weeks ago I was speaking at the Hay Festival, my first time there, and a 10-year-old boy in the audience asked a question that showed me how his mind was thinking things through.

He asked, quite simply it seemed at first, would robots, as they became smarter, feel the effects of the Uncanny Valley in relation to humans?

If you aren’t familiar with Masahiro Mori’s theory, there is a good summary, with some skin-shivering examples, here.

Initially, I thought perhaps he meant that as we move towards anthropoid robots that look like us, but not quite enough, so that they fall into that Valley, the opposite might also be true: in this future we will look like them, but perhaps not quite enough. And in fact, if they have cognisance of their artificial skin, then it is likely that our squishy skin would evoke an ick factor for them too. This understanding of the Uncanny Valley does, however, rely (as the above graph does) on the assumption that the Uncanny Valley response to artificial beings is an evolutionary by-product of a historical wariness around the strange-looking ‘Other’, who might be strange because they are diseased – a kind of pathogen avoidance. This kind of evolutionary psychology can make some people ‘itchy’, as one scientist told me after my talk, when I recounted the boy’s question to him along with this summary of the Uncanny Valley. There are other explanations for the Uncanny Valley, such as the fear evoked by the memento mori – the artificial other as a blank-eyed doll reminding us of our future dead state. And we can see the corpse and the zombie deep down in the Uncanny Valley in the above graph (the moving zombie being more Uncanny/Unfamiliar than the still corpse).

When I thought more about the boy’s question later on, I began to wonder whether he was solely asking about anthropoid robots, or whether he was considering an Uncanny Valley effect when we talk about intelligences: ‘smarter’ robots would also potentially be like us, but not us, in terms of intelligence. Murray Shanahan’s typology of ‘Conscious Exotica’ goes some way towards thinking about these other kinds of intelligence, but as I’ve written elsewhere, he doesn’t take into account the strong effect of anthropomorphism in his understanding of ‘like-ness’ to us. Putting anthropomorphism to one side for a moment, would we encounter ‘Uncanny’ reactions when faced with such intelligences, including, of course, AI? The science fictional encounter with the robot, and even the alien, is of course a working out in literature of our reactions to encounters with other minds. Having just seen Alien: Covenant, I can confirm that there is certainly something very Uncanny about David, Walter, and of course the various iterations of the Xenomorph. Physically this is obvious; it’s there in the way the androids look and talk, and in how the Xenomorph is biologically ‘icky’ as well as literally pathogenic. And we’d certainly want to avoid it, evolutionary psychology or not! But science fiction has long tried to show us how we might encounter alien intelligences that are like us but not quite enough, as in The War of the Worlds:

“No one would have believed in the last years of the nineteenth century that this world was being watched keenly and closely by intelligences greater than man’s and yet as mortal as his own; that as men busied themselves about their various concerns they were scrutinised and studied, perhaps almost as narrowly as a man with a microscope might scrutinise the transient creatures that swarm and multiply in a drop of water.”

We might read this and think that they are like us – as we produce scientists as well – but Wells is talking about the distance in intelligence between us and them. We are as the creatures in the water in relation to their intelligence.

Returning to a hypothetical future of ‘smart robots’, by which I take him to mean some level of intelligence near equivalent to our own, would this difference not also have an effect from the other direction? Would we also seem Uncanny to the robot?

After having my feet swept out from under me by this insightful twisting of our understanding of where we stood and where the ‘Other’ stood, I probably agreed all too quickly. But in recent days I have been preparing, with some colleagues, a talk for scientists on social scientific methods, specifically qualitative methods such as ethnography, and I have returned to the boy’s question as I’ve reconsidered the role and presence of the ethnographer.

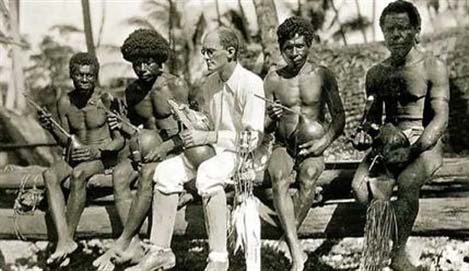

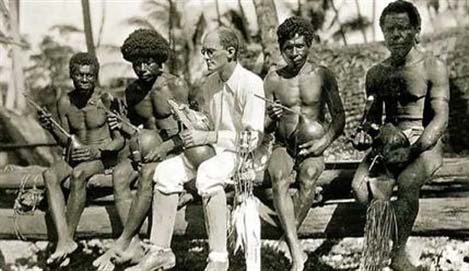

Malinowski among the Trobriand Islanders knew that he was different to them. There were obvious visual cues, as in the above picture, whereby his origins and cultural approach were clear. And yet his style of ethnography, which gave us the first steps towards the participant observation method, still relied on an older style of writing that formalised the ethnographer as a player in the field, without full self-reflexivity about presuppositions, power relations, and emotional context. The later, posthumous publication of his diaries from this time has given us a much more rounded view of the man who described himself epithetically in his ethnography as ‘a Savage Pole’, and it has also led more recent ethnographers to address themselves as informants in the field, just as much as the subjects of their study are.

Did Malinowski encounter the Uncanny Valley in relation to the Others of his field, and vice versa? Physical and aesthetic differences aside (although those are very natty socks he’s wearing), did differences in mind also incur the Uncanny effect during his fieldwork? On the whole, the modern ethnographer presumes a difference in intelligence when entering the field site – not a quantifiable difference of IQ, but a difference of perspective, as intelligence is a multi-faceted quality, not just a quantity. And when we move into a field where the informants are, on the surface, not that dissimilar to us, as when I researched modern Western New Agers of similar backgrounds and demographics to my own, we ethnographers are encouraged to be careful not to assume that we see the world in exactly the same way. Our use of language might well differ even if we are speaking what sounds like the same mother tongue. And that difference and distance is necessary for both understanding and self-reflection in the field. Feeling the Uncanny, although we would not term it such, is a way into deeper consideration for the ethnographer.

Which brings me to where the boy’s question finally led me. If smart robots felt the Uncanny in relation to humans, could there be a robot ethnographer? An immediate response would point to how well a robot could potentially be camouflaged in order to observe a field. We see this already in the use of remotely controlled cameras disguised as rocks or animals to observe animal communities. Some are perhaps more convincing than others…

However, the contemporary ethnographer does not seek to sneak into a community and just observe (although there are instances, such as research into criminal communities, where ethical frameworks requiring ethnographers to introduce themselves would be prohibitive and subterfuge might be safer). On the whole, the contemporary ethnographer intends to observe and participate, while remaining aware of their influence on the environment in a way that the robot penguin above does not.

There is also the presumption in current predictions for AI that smart robots would be positivistic, as that is how they have been built, and ethnography, as I tried to explain to the scientists, is not a positivistic approach. It is phenomenological – it looks to experiences and explores the ‘capta’, what is lived, not the ‘data’, what is thought. Presumably a robot ethnographer would have to have an experience of experience. And is that ever going to be possible for a robot ethnographer? Likewise, or conversely, any human attempting an ethnography of robots would need to find the capta of the robot. Multi-species ethnography appears to be an emerging field, but it focuses on the more obviously foregrounded evidence of interactions between the fates of animals and the social worlds of humans, e.g. when pollution leads to a population explosion in one species, suddenly benefitting from a niche taken away from another. At the moment, a multi-species ethnography that included the robotic would still consider the human influence on the development of robots rather than the robots’ own social worlds. I am, in my own small way, thinking about this element, although I would still not call myself an ethnographer of robots. Or a robographer. Or a robopologist. Asimov’s Susan Calvin, as a robopsychologist, retains the crown as the first proper robo-social scientist. In fiction, at least.

There is, however, certainly scope for the development of a wider anthropology of intelligences that takes on board the work of theorists like Donna Haraway, who remarks that, “If we appreciate the foolishness of human exceptionalism, then we know that becoming is always becoming with—in a contact zone where the outcome, where who is in the world, is at stake” (When Species Meet, 2008:244). This contact zone involves reactions like the Uncanny Valley, and for the ethnographer, addressing the areas where we find the jarring, the Uncanny, the weird, is a way into understanding and ‘writing about people’, the literal meaning of ethnography, however we choose to define ‘people’. The robot ethnographer is a nice little thought experiment that can get us into questions around intelligence, experience, and power relations.